Pricing experiments have been the backbone of Mojo’s monetization success.


Over the last decade, Michal has helped companies like Mojo, Smartlook, and Rohlík grow faster through experimentation, smart monetization, and fast iterations.

At Mojo, Michal spent the past two years scaling one of the most creative apps on the App Store: boosting ARPU by 60% year-over-year, growing annual plan adoption to 85%, and running 50+ paywall and pricing A/B tests in less than 12 months.

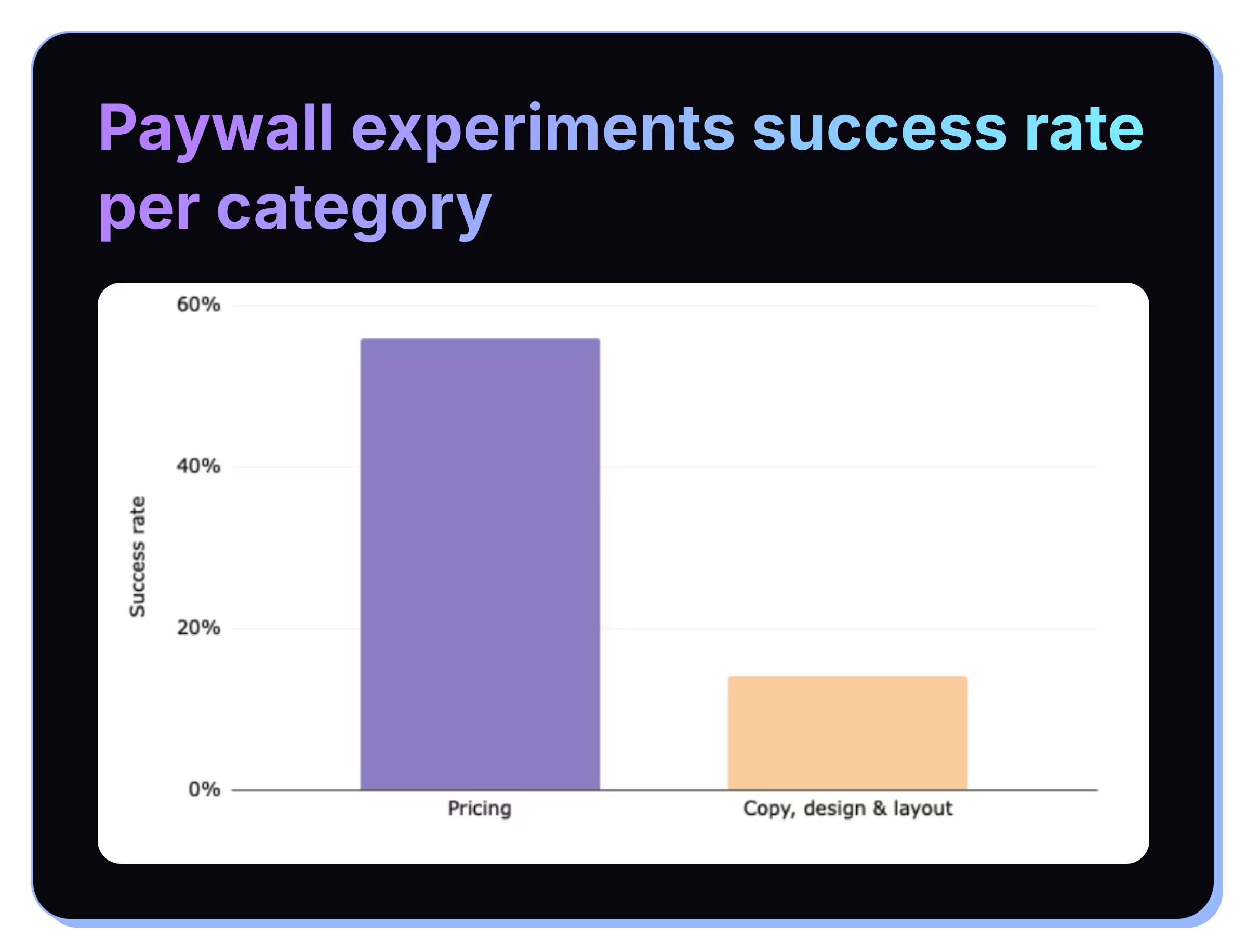

Our success rate for pricing experiments was 56%, compared to only 14% for design, copy, and layout tests. That difference alone explains why we’ve prioritized pricing over everything else. In fact, we ran nearly 40 pricing experiments in the past two years—twice as many as any other type.

But don’t get me wrong—pricing isn’t just about the number on the button. It’s a whole system that includes:

- The structure of your plan portfolio

- Introductory offers

- Psychological aspects like framing and anchoring

- Local currencies and price localization

- Promotions and discounts

We’ve explored all of these, and in this article, I’ll dive into some of our most impactful experiments and share what we learned along the way.

If your app is subscription-based, the first step is figuring out which subscription duration works best for you.

Is it weekly, monthly, or yearly?

Start by digging into your data—specifically:

- Paywall-to-purchase conversion rates for each duration

- Renewal rates

- Lifetime value (LTV) over a set period
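To make the comparison concrete, here is a minimal Python sketch of those three metrics. It assumes you can export aggregates per plan duration for one cohort window, and that a single paywall shows every duration, so all conversion rates share one denominator. The `DurationStats` fields, the 13-month LTV window, and the numbers in the usage example are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DurationStats:
    """Hypothetical aggregates for one plan duration in one cohort window."""
    purchases: int      # first purchases of this duration
    renewed: int        # of those buyers, how many renewed at least once
    revenue_13m: float  # revenue collected from these buyers within 13 months


def summarize(paywall_views: int, stats: dict[str, DurationStats]) -> dict[str, dict[str, float]]:
    """Conversion, renewal rate and 13-month LTV for each plan duration.

    Every duration is shown on the same paywall, so all conversion rates use
    the same denominator and revenue per view is comparable across durations.
    """
    summary = {}
    for duration, s in stats.items():
        summary[duration] = {
            "conversion": s.purchases / paywall_views,
            "renewal_rate": s.renewed / s.purchases if s.purchases else 0.0,
            "ltv_13m_per_buyer": s.revenue_13m / s.purchases if s.purchases else 0.0,
            "revenue_13m_per_view": s.revenue_13m / paywall_views,
        }
    return summary


# Illustrative numbers only, not Mojo data
report = summarize(
    paywall_views=10_000,
    stats={
        "monthly": DurationStats(purchases=50, renewed=20, revenue_13m=1_500.0),
        "yearly": DurationStats(purchases=200, renewed=60, revenue_13m=9_000.0),
    },
)
print(report)
```

Revenue per paywall view is the number to compare across durations: a plan can convert worse than another and still earn more per visitor.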

At Mojo, we discovered that yearly subscriptions were the most beneficial. We were able to convert a solid share of users into yearly plans and saw two big advantages:

- Higher New Revenue, which is great for user acquisition (UA) performance.

- More revenue at Month 13 – roughly 2–3× higher compared to monthly cohorts.

That said, subscription preferences can vary by region. At Mojo, for example, yearly plans make up 90%+ of subscription purchases in the US, but “only” about 80% in Brazil. Make sure to analyze the data by key market before you commit to one duration globally.

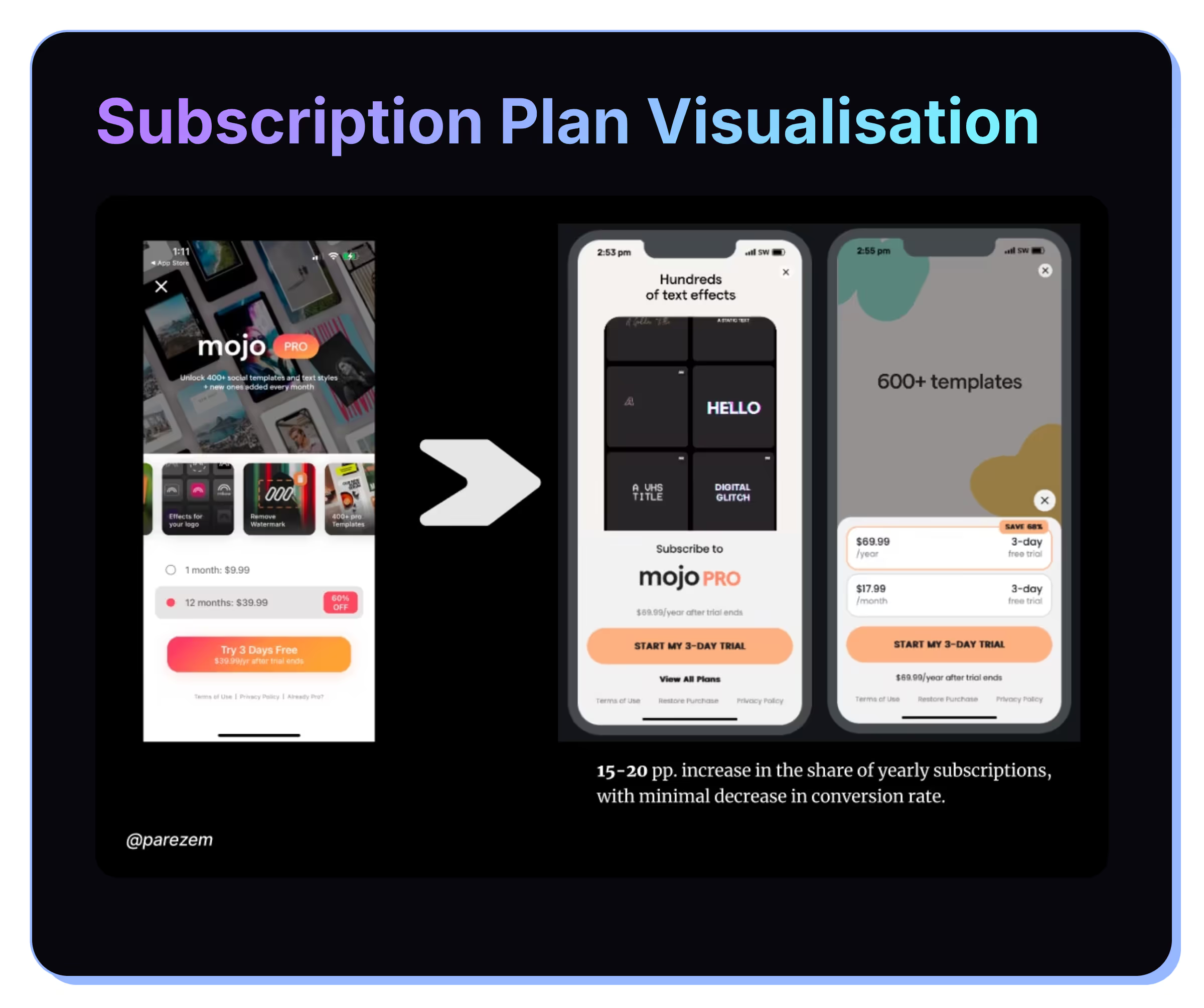

Testing plans visualisation

At Mojo, we found that tucking the monthly plan behind a “View all plans” button encouraged more users to choose the yearly option, which significantly boosted revenue. We deliberately did not remove the monthly plan entirely. Its share isn’t huge, but it still has fans who prefer it. Perhaps more importantly, the monthly plan acts as a “decoy” that makes the yearly plan look cheaper.

Once you know your ideal plan duration, move on to testing different price points—with your preferred plan as the main focus.

Start with some groundwork:

- Research how your closest competitors price their apps across key geos.

- Use a VPN or tools like Switchr to check local prices.

- Read user reviews to see what people say about your pricing.

- Analyze conversion rates across countries. If India and the US convert similarly, your US price might be too high. India typically has lower purchasing power and higher price sensitivity, so if your conversion rate in India is equal to or higher than in the US, your price is either relatively low for India or too high for the US, depending on which market you use as the benchmark.

For deeper research, consider structured pricing studies like the Van Westendorp Price Sensitivity Meter or Conjoint Analysis.
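In a Van Westendorp study, one headline output is the Optimal Price Point (OPP): the price at which the share of respondents calling it “too cheap” equals the share calling it “too expensive”. A minimal Python sketch, assuming each respondent’s answers arrive as plain numbers and that scanning a fixed grid of candidate prices is precise enough. A full analysis would also use the “cheap” and “expensive” answers to find the acceptable price range.

```python
def share_too_cheap(answers: list[float], price: float) -> float:
    """Share of respondents whose 'too cheap' threshold is at or above `price`."""
    return sum(1 for t in answers if t >= price) / len(answers)


def share_too_expensive(answers: list[float], price: float) -> float:
    """Share of respondents whose 'too expensive' threshold is at or below `price`."""
    return sum(1 for t in answers if t <= price) / len(answers)


def optimal_price_point(too_cheap: list[float], too_expensive: list[float],
                        grid: list[float]) -> float:
    """Grid price where the falling 'too cheap' curve and rising 'too expensive' curve meet."""
    return min(grid, key=lambda p: abs(share_too_cheap(too_cheap, p)
                                       - share_too_expensive(too_expensive, p)))


# Illustrative survey answers (yearly price in USD), not real data
too_cheap = [10, 20, 30, 40]
too_expensive = [30, 40, 50, 60]
print(optimal_price_point(too_cheap, too_expensive, list(range(5, 85, 5))))  # → 35
```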

Once you’ve gathered the insights, you’ll likely have plenty of ideas for experiments. Begin with your main markets—that’s where the biggest impact usually comes from.

Localize and experiment

Don’t be afraid to test lower prices.

At Mojo, when we dropped prices by 25% in Mexico, we saw a 25% lift in New Revenue.
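It’s worth spelling out what that result implies. If New Revenue is roughly price × purchases, and traffic and plan mix stayed comparable between the test arms (an assumption), then a 25% lower price combined with 25% more revenue means purchases rose by about two thirds. A quick Python check:

```python
def implied_purchase_lift(price_change: float, revenue_change: float) -> float:
    """Change in purchase volume implied by a price change and the observed revenue change.

    Assumes revenue = price x purchases, with the same traffic and plan mix in both arms.
    """
    return (1 + revenue_change) / (1 + price_change) - 1


# Mexico example: price -25%, New Revenue +25%
print(f"{implied_purchase_lift(-0.25, 0.25):.1%}")  # → 66.7%
```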

And on the flip side—don’t hesitate to test higher prices, either. Just make sure you have the data to back it up. Look beyond short-term New Revenue; monitor the impact on cancellation rates too.

In our experience, higher prices usually come with higher cancellation rates, which affects long-term renewals and overall revenue.

We found that the 7-day subscription cancellation rate is a good early proxy for renewal performance. Since over 40% of cancellations happen in the first few days, we could predict renewal outcomes fairly early. That’s why we include this proxy in every price test report, for every cohort.
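A minimal sketch of that proxy in Python, assuming a hypothetical export of (purchase time, cancellation time) pairs per cohort, where “cancellation” means turning off auto-renew. Purchases younger than seven days are left out, so they don’t pull the rate down before they’ve had the full window to cancel.

```python
from datetime import datetime, timedelta
from typing import Iterable, Optional

WINDOW = timedelta(days=7)


def cancellation_rate_7d(subs: Iterable[tuple[datetime, Optional[datetime]]],
                         now: datetime) -> Optional[float]:
    """Share of mature subscriptions cancelled within 7 days of purchase.

    `subs` holds (purchased_at, cancelled_at) pairs (a hypothetical export format);
    cancelled_at is None while auto-renew is still on.
    """
    # Only count purchases that have had the full 7 days to cancel
    mature = [(bought, cancelled) for bought, cancelled in subs if now - bought >= WINDOW]
    if not mature:
        return None
    cancelled_early = sum(
        1 for bought, cancelled in mature
        if cancelled is not None and cancelled - bought <= WINDOW
    )
    return cancelled_early / len(mature)


now = datetime(2024, 1, 20)
subs = [
    (datetime(2024, 1, 1), datetime(2024, 1, 3)),    # cancelled on day 2 -> counts
    (datetime(2024, 1, 1), None),                    # still renewing
    (datetime(2024, 1, 2), datetime(2024, 1, 15)),   # cancelled on day 13 -> not early
    (datetime(2024, 1, 18), datetime(2024, 1, 19)),  # too recent, excluded
]
print(cancellation_rate_7d(subs, now))
```

Run it separately for each variant and plan duration, then compare the result with the market’s historical baseline.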

Balancing Revenue and Retention

Here’s a simple example:

We tested three yearly price points (equivalent monthly prices):

- Baseline: $49.99

- Variant I: $69.99

- Variant II: $89.99

Variant II generated the highest New Revenue, but also had a much higher 7-day cancellation rate. When we projected the 13-month revenue, it was not the winner.

Variant I, on the other hand, didn’t lift New Revenue as much—but it performed significantly better in long-term revenue projection. It ended up being the clear winner.

How to calculate a 13-month revenue projection?

- From the experiment, take the following for each cohort (variant) and plan duration (monthly/yearly):

  - New Revenue

  - 7-day cancellation rate

- Compare each cohort’s 7-day cancellation rate to your overall market baseline, and use this to estimate renewal rates per plan duration (rule of three method).

- For monthly plans, project revenue for Months 1–12 using the projected renewal rate(s).

- For yearly plans, project Year 1 renewal revenue using the same logic.

- Sum all projected revenues:

  - Monthly: Month 0 → Month 12

  - Yearly: Year 0 + Year 1

- Add the monthly and yearly totals to get the 13-month projected revenue.
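The steps above can be sketched in Python. Two modelling choices here are assumptions, not necessarily what Mojo did: the “rule of three” is applied to the share of subscribers who did *not* cancel within 7 days (so a higher early-cancellation rate scales the baseline renewal rate down proportionally), and monthly plans use one constant month-over-month renewal rate. The baseline values would come from your own historical data for the market; the numbers below are illustrative.

```python
from dataclasses import dataclass


@dataclass
class PlanCohort:
    new_revenue: float  # Month 0 (monthly) or Year 0 (yearly) revenue from the test
    cancel_7d: float    # this cohort's 7-day cancellation rate, 0..1


@dataclass
class Baseline:
    renewal_rate: float  # historical renewal rate for this plan duration in the market
    cancel_7d: float     # historical 7-day cancellation rate for the same duration


def projected_renewal_rate(cohort: PlanCohort, base: Baseline) -> float:
    """Rule of three on the share still active after 7 days (an assumed reading)."""
    factor = (1 - cohort.cancel_7d) / (1 - base.cancel_7d)
    return min(1.0, base.renewal_rate * factor)


def project_13_month_revenue(monthly: PlanCohort, monthly_base: Baseline,
                             yearly: PlanCohort, yearly_base: Baseline) -> float:
    # Monthly: Month 0 -> Month 12, assuming a constant month-over-month renewal rate
    r_m = projected_renewal_rate(monthly, monthly_base)
    monthly_total = sum(monthly.new_revenue * r_m ** month for month in range(13))
    # Yearly: Year 0 + Year 1 renewals
    r_y = projected_renewal_rate(yearly, yearly_base)
    yearly_total = yearly.new_revenue * (1 + r_y)
    return monthly_total + yearly_total


# Illustrative inputs for one variant
monthly_base = Baseline(renewal_rate=0.6, cancel_7d=0.3)
yearly_base = Baseline(renewal_rate=0.35, cancel_7d=0.2)
variant = project_13_month_revenue(
    PlanCohort(new_revenue=1_000.0, cancel_7d=0.3), monthly_base,
    PlanCohort(new_revenue=10_000.0, cancel_7d=0.4), yearly_base,
)
print(round(variant, 2))
```

Run it once per variant and pick the variant with the highest projection rather than the highest New Revenue.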

I highly recommend using psychological pricing techniques — the ethical kind, of course. (Apple wouldn’t let you use the shady ones anyway 😉)

As humans, we don’t evaluate prices in isolation; we think relatively. A price only feels expensive or cheap in comparison. For consumer subscription apps, that means comparing it:

- to what other apps charge,

- to what your other plans cost, or

- to what value we perceive we’re getting in return.

That’s why framing and anchoring matter so much. A higher-priced monthly plan can make your yearly plan feel more affordable. A “best value” label can nudge users toward the middle option. Even showing the discount vs. full price helps people anchor their decision.

The goal isn’t to manipulate — it’s to guide perception so users clearly understand which plan delivers the best value for them.

At Mojo, we had great success with these techniques:

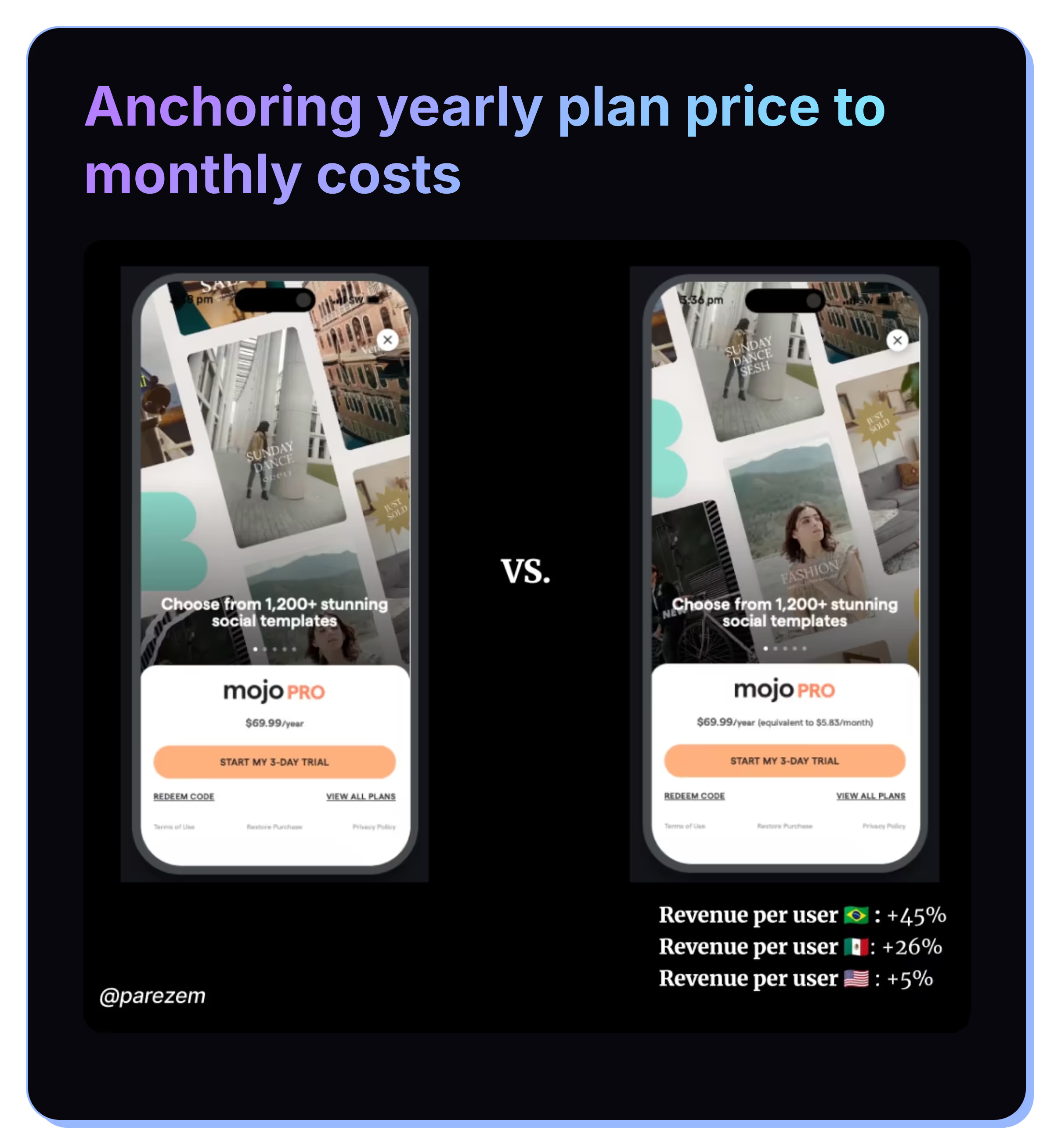

Anchoring yearly plan price to monthly costs

We added a single line next to our yearly price: “equivalent to $X/month”. This super low-effort experiment had a tremendous impact, particularly in countries with lower purchasing power.
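Computing that label is simple arithmetic, but rounding and currency precision deserve a moment’s thought. A minimal Python sketch, assuming prices are held as `Decimal`. The half-up rounding and the `quantum` parameter for zero-decimal currencies are illustrative choices; in a real app, the displayed string should come from the store’s localized price and the platform’s currency formatting.

```python
from decimal import Decimal, ROUND_HALF_UP


def monthly_equivalent(yearly_price: Decimal, quantum: Decimal = Decimal("0.01")) -> Decimal:
    """Yearly price spread over 12 months, rounded to the currency's smallest unit.

    Pass quantum=Decimal("1") for zero-decimal currencies such as JPY.
    Half-up rounding avoids understating the monthly cost.
    """
    return (yearly_price / 12).quantize(quantum, rounding=ROUND_HALF_UP)


print(f"${monthly_equivalent(Decimal('49.99'))}/month")  # → $4.17/month
```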

Setting the optimal Yearly-to-Monthly price ratio

How your monthly and yearly plans are priced relative to each other has a huge impact on how well each sells. We wanted to increase yearly plan adoption, so we ran tests with different ratios to find the sweet spot.

Many apps I’ve seen use a 1:7 monthly-to-yearly ratio, for example $10/month vs. $70/year. Through experimentation, however, we discovered that a 1:4 ratio performed much better for us: it drove more yearly subscriptions while maintaining the same overall conversion rate.

In those tests, we usually kept the yearly price constant and adjusted the monthly price to change the perceived value gap. Even small shifts in this ratio can significantly influence which plan users perceive as the “better deal.”
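When the yearly price is held fixed, as in those tests, the monthly price and the saving the yearly plan appears to offer follow directly from the ratio. A small Python sketch using the $70/year example from above; real prices would then be snapped to the nearest available store price point.

```python
def monthly_price_for_ratio(yearly_price: float, ratio: float) -> float:
    """Monthly price giving a 1:ratio monthly-to-yearly relationship, yearly held fixed."""
    return yearly_price / ratio


def yearly_saving(monthly_price: float, yearly_price: float) -> float:
    """Fraction saved by paying yearly instead of 12 monthly payments."""
    return 1 - yearly_price / (12 * monthly_price)


yearly = 70.0
for ratio in (7, 4):
    monthly = monthly_price_for_ratio(yearly, ratio)
    print(f"1:{ratio}: ${monthly:.2f}/month vs ${yearly:.2f}/year, "
          f"yearly saves {yearly_saving(monthly, yearly):.0%}")
```

At $70/year, a 1:7 ratio means $10.00/month and a 42% yearly saving, while 1:4 means $17.50/month and a 67% saving, a much more visible gap on the paywall.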

Free trials spark endless debate among subscription apps.

On one hand, they can dramatically boost conversion by lowering the barrier to entry and letting users experience real value before committing.

On the other, trials can attract low-intent users, inflate acquisition costs, and delay revenue — especially if the product’s value isn’t immediate or clear.

The truth is, there’s no universal answer — the best approach is to A/B test it. Measure not just short-term conversion, but also retention, renewal rate, and lifetime value. Run these experiments across your key markets and products, as preferences often vary more than you’d expect.

At Mojo, we tested free trials extensively and learned a few key things:

- The free trial is crucial for new users — removing it led to a noticeable drop in conversion and engagement.

- The trial length wasn’t a major factor. We tested both 3-day and 7-day versions and saw minimal difference in outcomes, so we chose 3 days to reduce revenue delay.

- To encourage yearly plan adoption, we don’t offer a trial for monthly plans.

- The exceptions are Brazil and Mexico, where removing the trial from monthly plans actually backfired. Not coincidentally, these are the markets with the highest share of monthly subscriptions.

- If your product relies heavily on AI features, factor in AI usage costs. When we introduced the Mojo Pro AI plan, we were initially cautious about offering a trial because of cost concerns. But as costs declined and our data showed trials were sustainable, we extended trials to AI plans as well.

Discounts can be a double-edged sword. On one hand, they’re a powerful lever — an easy way to boost conversion, reactivate lapsed users, or test price sensitivity quickly. On the other hand, discounting too often can hurt your brand: it conditions users to wait for sales, undermines perceived value, and makes your “real” price look inflated.

The key isn’t avoiding discounts altogether — it’s about using them strategically. Occasional, time-bound offers can create urgency or reward engagement without cheapening the brand.

I’d like to share two interesting discount experiments from Mojo.

Discount experiment #1: Seasonal (Black Friday) discount to all new users

A few years ago, for Black Friday, we ran a seasonal discount available to both new and existing free users. The offer was live for several days across all markets.

After it ended, I ran a detailed analysis to understand its true impact. A few key insights emerged:

- The time-limited offer worked best for existing free users and in lower-income regions, where the baseline conversion to paid is usually lower.

- For new users, however, the effect was net negative, likely because new users already have high purchase intent and our onboarding and paywall flows were already well optimized.

- The broad “spray-and-pray” discount approach also underperformed in high-purchase-power markets like the US.

Takeaway: Broad, untargeted discounts can erode efficiency — context and audience matter far more than timing alone.

Discount experiment #2: Setting up a discount scheme backed by data

Next, we took a more targeted approach. We analyzed purchase behavior by user lifetime and noticed a clear pattern: older users converted significantly worse, with a drop-off around Day 30.

To re-engage them, we introduced a 30% discount around that point — and that single change boosted revenue per user by 87%.

Encouraged by the result, we later added a second discount trigger at Day 90, increasing the discount to 50%, which also performed strongly.
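A lifetime-based trigger like this can be expressed as a small lookup. A minimal Python sketch, assuming a hypothetical `days_since_install` value is available when the paywall is shown and that each offer stays available until the next tier starts. The source doesn’t say how long Mojo’s offers ran, so treat the windows as placeholders.

```python
from typing import Optional

# Hypothetical schedule mirroring the two triggers described above,
# ordered from the latest trigger to the earliest: (min days since install, discount)
DISCOUNT_SCHEDULE = [(90, 0.50), (30, 0.30)]


def discount_for(days_since_install: int, is_subscriber: bool) -> Optional[float]:
    """Discount to offer a non-paying user, or None if no trigger applies yet."""
    if is_subscriber:
        return None
    for min_days, discount in DISCOUNT_SCHEDULE:
        if days_since_install >= min_days:
            return discount
    return None


print(discount_for(45, is_subscriber=False))
```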

Takeaway: Discounts work best when anchored in behavioral data — offered at the right time, to the right users, with a clear rationale.


Pricing isn’t a one-time decision — it’s an ongoing system of learning. Every test reveals not just what people are willing to pay, but how they perceive value. At Mojo, the biggest wins came not from guessing right the first time, but from running disciplined experiments, interpreting them honestly, and iterating fast.

The takeaway? Don’t copy someone else’s pricing playbook. Build your own. Use data, psychology, and empathy in equal measure. When you approach pricing as a living, evolving part of your product, it becomes one of the most powerful growth engines you have.
